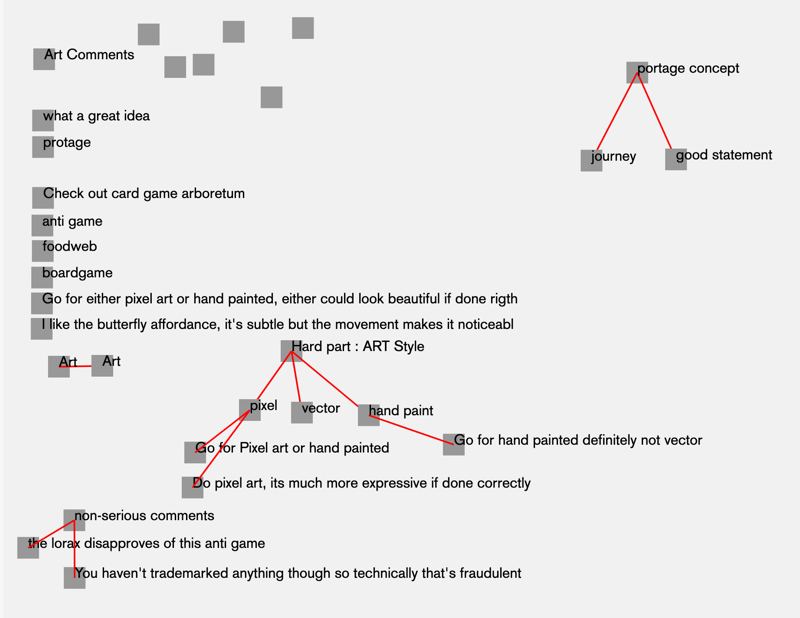

This testing session was carried out with Year 3 Games Design & Art students, using nodenogg.in version 0.0.24d (YouTube explainer). The students, some of them working in teams, were presenting a series of potential game ideas to each other. Each presentation was 12 minutes long and covered three game ideas with an accompanying slide deck. The other students used a nodenogg.in instance to comment while the presentation was happening and for a few brief moments afterwards. The one main question I asked each team to outline, so that it could be answered collectively via nodenogg.in, was: what is their biggest struggle or issue? For example, choosing which game idea to take forward, or another aspect the “crowd” could help with.

On reflection, I think a more focused use of nodenogg.in on that closing question would have worked better. I noticed that contributing live with only the spatial view on show was much harder: the cognitive load of listening, typing and thinking spatially at the same time was high. This was partly due to removing the list view, which again points to the idea of a series of views, each suited to a different type of nodenogg.in session. Although some connections did start to be drawn, as seen in the screenshot, even with the shortcuts the crowd couldn’t think that fast.

I also felt that, because I was thinking and responding during this session as well, I missed more of the issues students hit with nodenogg.in this time. In future I need to either record the session in a way that is useful for my own reflection, or get another staff member to use the tool with students in a prescribed way while I just observe. We have a big session set for the end of January, and I need to prepare the use case (or cases) so I can gain as much useful feedback from it as possible. This will likely include blocking out some time for students to write feedback into Discourse. I may need to use Microsoft Forms instead, as Discourse is public and students need to join it to complete the feedback, which is definitely a barrier.

This testing has shown that the spatial view is a slower thinking space, which needs to be coupled with a quicker “throw thoughts into a bin” exercise.

For this to work, we first need a bucket collection mode. We then move into a spatial view where the collected entries are first neatly arranged¹, and then facilitate a spatial process on the ideas: discarding some, clustering some, connecting some, and adding new, better-informed and more detailed inputs to the spatial view.

The spatial view does need to trim the text, but it must still be possible to glance at the information and arrange it, as having to keep opening a reader view may be too slow, even in spatial mode.

For now, I’ll call these two modes Bucket mode and Consideration mode.
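The footnote’s idea of an initial auto-placement by entry time, applied when switching from Bucket mode into Consideration mode, could look something like the minimal sketch below. It assumes each bucket entry carries a creation timestamp; the `BucketEntry` shape, the `autoPlace` function and the grid sizes are all hypothetical and are not taken from nodenogg.in’s actual code.

```typescript
// Hypothetical shape of a note captured in Bucket mode.
interface BucketEntry {
  id: string;
  text: string;
  createdAt: number; // entry time, ms since epoch
}

// Hypothetical node with a position on the spatial (Consideration mode) canvas.
interface PlacedNode extends BucketEntry {
  x: number;
  y: number;
}

// Lay entries out on a grid, oldest first, filling each row left to right.
// The original array is copied so the bucket order is left untouched.
function autoPlace(
  entries: BucketEntry[],
  columns = 4,
  cellWidth = 220,
  cellHeight = 140,
): PlacedNode[] {
  return [...entries]
    .sort((a, b) => a.createdAt - b.createdAt)
    .map((entry, index) => ({
      ...entry,
      x: (index % columns) * cellWidth,
      y: Math.floor(index / columns) * cellHeight,
    }));
}

// Example: three entries thrown into the bucket out of order.
const bucket: BucketEntry[] = [
  { id: "c", text: "Scope is too big", createdAt: 3000 },
  { id: "a", text: "Idea 2 feels strongest", createdAt: 1000 },
  { id: "b", text: "Art style unclear", createdAt: 2000 },
];

const placed = autoPlace(bucket, 2);
console.log(placed.map((n) => `${n.id}@${n.x},${n.y}`).join(" ")); // a@0,0 b@220,0 c@0,140
```

Ordering by entry time keeps the first layout predictable and roughly mirrors the flow of the presentation; the grid is only a neutral starting point, with the discarding, clustering and connecting still done by hand in Consideration mode.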

Main takeaways

- Bucket mode to be turned on.

- Reader view needs some work.

- Gathering more feedback in sessions is really important.

¹ Some type of initial auto-placement, based on entry time perhaps?